Mastering Azure AI Search: The Unsung Hero Behind RAG and Enterprise Intelligence

How Microsoft’s AI-native search engine powers smarter retrieval, enriches unstructured data, and anchors enterprise-grade GenAI apps.

1. Azure AI Search – A Strategic Powerhouse for the GenAI Era

Azure AI Search isn’t just another cloud service—it’s quickly becoming the foundation for Retrieval-Augmented Generation (RAG) architectures and intelligent enterprise search systems. At a time when generative AI apps demand factual grounding, contextual awareness, and performance at scale, this managed service brings together full-text search, vector embeddings, semantic ranking, and AI enrichment pipelines under one roof.

Over years of evolution (Azure Search ➜ Azure Cognitive Search ➜ Azure AI Search), Microsoft has reimagined search, turning a static keyword box into a dynamic, AI-enhanced retrieval engine. Today, Azure AI Search plays a central role in transforming unstructured content (documents, images, PDFs, web pages) into knowledge sources that power LLM-based chat experiences, virtual assistants, and multimodal interfaces.

🧠 Architect Tip: Treat Azure AI Search as a smart, contextual memory layer for your LLMs—not a traditional search bar.

2. The Engine Room: Full-Text, Vector, Semantic & Hybrid Retrieval

Full-Text Search leverages Apache Lucene to tokenize, index, and rank documents using BM25. Azure enhances this with scoring profiles, custom analyzers, and boosting options that let architects fine-tune the relevance for business-specific use cases. For example, you can prioritize documents where a keyword appears in the title or boost lower-priced products.
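For intuition, here's a minimal sketch of textbook Okapi BM25 in plain Python. It is not Azure AI Search's implementation: the service relies on Lucene's BM25, whose formula differs in small details, and the lowercase-and-split `tokenize` function is a stand-in for a real analyzer. The `k1=1.2` and `b=0.75` values are the commonly used defaults; the sample documents are made up.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Stand-in for a Lucene analyzer: lowercase and split on whitespace only.
    return text.lower().split()


def bm25_scores(query: str, docs: list[str], k1: float = 1.2, b: float = 0.75) -> list[float]:
    """Score every document against the query with textbook Okapi BM25."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(toks) for toks in tokenized) / n_docs
    # Document frequency: how many documents contain each term.
    df = Counter(term for toks in tokenized for term in set(toks))

    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in tokenize(query):
            if tf[term] == 0:
                continue  # an absent term contributes nothing
            # Rare terms (low df) get a higher inverse document frequency.
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Length normalization: longer documents need more matches to score as high.
            denom = tf[term] + k1 * (1 - b + b * len(toks) / avg_len)
            score += idf * tf[term] * (k1 + 1) / denom
        scores.append(score)
    return scores


docs = [
    "Azure AI Search ranks documents with BM25",
    "Azure Functions can host custom skills",
    "A recipe for sourdough bread",
]
print(bm25_scores("bm25 search", docs))
```

The `b` parameter controls how strongly long documents are penalized, and `k1` controls how quickly repeated occurrences of a term stop adding to the score.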

Vector Search changes this experience entirely. Using embeddings from models such as OpenAI's embedding models, SBERT, or CLIP, Azure AI Search supports similarity search, multilingual queries, and multimodal content such as combined image and text retrieval. Vectors capture conceptual meaning, so a query for "dog" can retrieve documents about "canines," even across languages.
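Conceptually, vector search ranks documents by how close their embeddings sit to the query's embedding. The sketch below performs an exhaustive (brute-force) cosine-similarity k-nearest-neighbor search over toy 3-dimensional vectors. The vectors are invented for illustration rather than produced by any model, and at scale Azure AI Search would typically use an approximate index such as HNSW instead of scoring every document.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means same direction, 0.0 means orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def knn(query_vec: list[float], index: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Exhaustive k-nearest-neighbor search: score every vector, keep the top k."""
    scored = [(doc_id, cosine(query_vec, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy "embeddings": the first axis loosely encodes "animal-ness".
index = {
    "doc-canine": [0.9, 0.1, 0.0],
    "doc-feline": [0.8, 0.3, 0.1],
    "doc-invoice": [0.0, 0.2, 0.9],
}
query_dog = [0.95, 0.05, 0.0]  # pretend embedding of the query "dog"
print(knn(query_dog, index, k=2))
```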

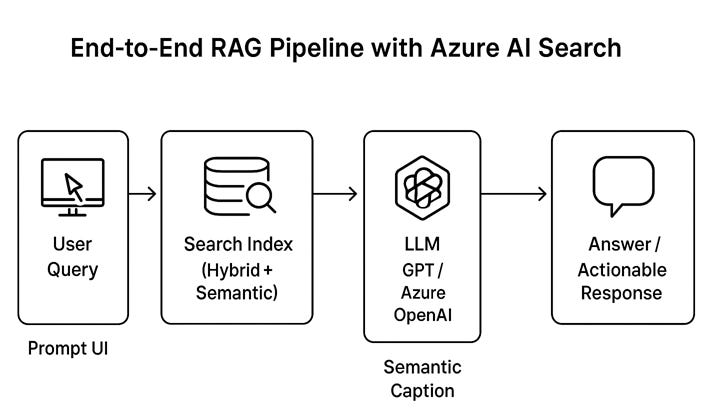

Semantic Search (now called the semantic ranker) adds another AI-native layer: it reranks the top results of the initial query using deep learning models adapted from Microsoft Bing. It can also return semantic captions and answers, which improves the user experience and, in RAG scenarios, gives LLMs better grounding material that helps reduce hallucinations.
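The semantic ranker's models are proprietary, so they can't be reproduced here, but the retrieve-then-rerank pattern it follows can. In this sketch, a first stage supplies an ordered candidate list, and only the top N candidates (the semantic ranker works on up to the top 50) are rescored by a second scorer. `overlap_scorer` is a hypothetical stand-in for a deep learning model, not Microsoft's algorithm, and the candidate passages are made up.

```python
from typing import Callable


def rerank(
    query: str,
    first_stage: list[tuple[str, str]],
    scorer: Callable[[str, str], float],
    top_n: int = 50,
) -> list[str]:
    """Rescore only the top_n first-stage candidates; the rest keep their order after them."""
    head, tail = first_stage[:top_n], first_stage[top_n:]
    reranked = sorted(head, key=lambda item: scorer(query, item[1]), reverse=True)
    return [doc_id for doc_id, _ in reranked] + [doc_id for doc_id, _ in tail]


def overlap_scorer(query: str, passage: str) -> float:
    # Hypothetical stand-in for a learned reranker: fraction of query words found in the passage.
    q = set(query.lower().split())
    return len(q & set(passage.lower().split())) / len(q)


# First-stage results as (id, text), in lexical-rank order.
candidates = [
    ("d1", "pricing tiers and billing for search"),
    ("d2", "how to reset an admin api key for search"),
    ("d3", "api key rotation steps"),
]
print(rerank("reset api key", candidates, overlap_scorer))  # ['d2', 'd3', 'd1']
```

The expensive scorer only ever sees `top_n` items, which is what keeps this kind of reranking affordable at query time.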

Hybrid Search blends the strengths of both full-text and vector search (precision from keywords, flexibility from vectors): it runs both queries in parallel and merges the results with Reciprocal Rank Fusion (RRF).
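Reciprocal Rank Fusion is simple enough to write out. Every ranked list contributes 1 / (k + rank) for each document it contains, and documents are re-sorted by their summed scores. This sketch uses k = 60, the constant from the original RRF paper; the document IDs and both input rankings are made up.

```python
from collections import defaultdict


def reciprocal_rank_fusion(ranked_lists: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Merge several ranked ID lists; rank is 1-based within each list."""
    fused: dict[str, float] = defaultdict(float)
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            fused[doc_id] += 1.0 / (k + rank)
    return sorted(fused.items(), key=lambda item: item[1], reverse=True)


keyword_hits = ["doc-a", "doc-b", "doc-c"]  # e.g., BM25 order
vector_hits = ["doc-c", "doc-a", "doc-d"]   # e.g., cosine-similarity order
for doc_id, score in reciprocal_rank_fusion([keyword_hits, vector_hits]):
    print(doc_id, round(score, 5))
```

Because RRF looks only at ranks, BM25 scores and cosine similarities never need to be put on a common scale, which is what makes it a practical way to merge keyword and vector results.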

🧠 Developer Insight: Always lean toward hybrid + semantic if your app powers AI chat, RAG, or knowledge discovery. It improves grounding, recall, and factual accuracy.

3. AI Enrichment: From Raw Data to Structured Knowledge

Azure AI Search’s real magic lies in how it enriches unstructured data. Its cognitive skills—like OCR, language detection, sentiment analysis, key phrase extraction—transform blobs, PDFs, and images into structured fields.
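Built-in skills are wired together in a skillset definition, where each skill reads from and writes to paths in an enrichment tree rooted at /document. Below is a hedged sketch of a two-skill skillset as a Python dict shaped like the REST API's JSON (language detection feeding key phrase extraction), plus a hypothetical helper, `unresolved_inputs`, that checks every input is produced by the source document or by an earlier skill in the list. The skillset name is made up, and the check is a simplification: the service itself works out execution order from the skills' dependencies.

```python
import json

# Two built-in skills chained together: language detection feeds key phrase extraction.
skillset = {
    "name": "enrichment-skillset",  # hypothetical name
    "description": "Detect language, then extract key phrases",
    "skills": [
        {
            "@odata.type": "#Microsoft.Skills.Text.LanguageDetectionSkill",
            "context": "/document",
            "inputs": [{"name": "text", "source": "/document/content"}],
            "outputs": [{"name": "languageCode", "targetName": "language"}],
        },
        {
            "@odata.type": "#Microsoft.Skills.Text.KeyPhraseExtractionSkill",
            "context": "/document",
            "inputs": [
                {"name": "text", "source": "/document/content"},
                # Consumes the enrichment node written by the previous skill.
                {"name": "languageCode", "source": "/document/language"},
            ],
            "outputs": [{"name": "keyPhrases", "targetName": "keyPhrases"}],
        },
    ],
}


def unresolved_inputs(definition: dict, base_fields=("/document/content",)) -> list[str]:
    """Return input sources not provided by the document or by an earlier skill in list order."""
    available = set(base_fields)
    missing = []
    for skill in definition["skills"]:
        for inp in skill["inputs"]:
            if inp["source"] not in available:
                missing.append(inp["source"])
        for out in skill["outputs"]:
            available.add(f'{skill["context"]}/{out["targetName"]}')
    return missing


print(json.dumps(skillset, indent=2))
print(unresolved_inputs(skillset))  # []
```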

Beyond built-in skills, developers can add custom enrichment through the Custom Web API skill, which is commonly hosted in Azure Functions. For example, you can add domain-specific classification for legal contracts or financial documents during ingestion.
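A custom skill is a web endpoint that receives a batch of records and returns enriched fields for each one. The sketch below implements the request/response shape of the Custom Web API skill (a `values` array of records, each with a `recordId` and a `data` payload) as a plain Python function, so it could sit behind an Azure Functions HTTP trigger or any other web framework. The keyword `RULES`, the `classify` helper, and the `documentType` output field are hypothetical placeholders for a real domain classifier.

```python
import json

# Hypothetical rules for illustration; a real skill might call a trained classifier.
RULES = {
    "legal_contract": ("indemnify", "governing law", "termination clause"),
    "financial_report": ("balance sheet", "ebitda", "fiscal year"),
}


def classify(text: str) -> str:
    lowered = text.lower()
    for label, keywords in RULES.items():
        if any(kw in lowered for kw in keywords):
            return label
    return "other"


def handle_skill_request(body: dict) -> dict:
    """Process a Custom Web API skill request: one output record per input record."""
    results = []
    for record in body.get("values", []):
        text = record.get("data", {}).get("text")
        if not isinstance(text, str):
            # Report a per-record error instead of failing the whole batch.
            results.append({
                "recordId": record["recordId"],
                "data": {},
                "errors": [{"message": "Missing 'text' input"}],
                "warnings": [],
            })
            continue
        results.append({
            "recordId": record["recordId"],
            "data": {"documentType": classify(text)},
            "errors": [],
            "warnings": [],
        })
    return {"values": results}


request = {
    "values": [
        {"recordId": "1", "data": {"text": "The parties agree to indemnify each other..."}},
        {"recordId": "2", "data": {}},
    ]
}
print(json.dumps(handle_skill_request(request), indent=2))
```

Returning errors per record (rather than raising) lets the indexer keep processing the rest of the batch.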

Knowledge Mining becomes the end product: searchable indexes loaded with semantically enriched, filtered, and vectorized content. These can be used not only for search but also for downstream analytics, compliance, and even AI training sets.

🧠 Architect Tip: Store enriched content in a Knowledge Store for long-term use, analytics, or downstream ML.

4. Building the Architecture: Indexers, APIs & RAG Workflows

The foundational unit in Azure AI Search is the search index, a high-performance data structure that stores tokenized, vectorized, and enriched content. You decide each field's attributes (searchable, filterable, facetable, sortable, retrievable), and the service builds the underlying inverted and vector indexes that serve queries in milliseconds.
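Here's a hedged sketch of an index definition, written as a Python dict shaped like the JSON body of the REST Create Index request (API version 2023-11-01 or later). The index name, field names, profile names, and the 1536-dimension vector size are assumptions for illustration; the dimension must match whatever embedding model you actually use. Attributes are set explicitly instead of relying on defaults, so the cost of each field is visible.

```python
import json

index_definition = {
    "name": "docs-index",  # hypothetical index name
    "fields": [
        # Exactly one key field uniquely identifies each document.
        {"name": "id", "type": "Edm.String", "key": True,
         "searchable": False, "filterable": True, "sortable": False, "facetable": False},
        {"name": "title", "type": "Edm.String",
         "searchable": True, "filterable": False, "sortable": False, "facetable": False},
        # The analyzer controls tokenization and stemming for full-text queries.
        {"name": "content", "type": "Edm.String", "analyzer": "en.microsoft",
         "searchable": True, "filterable": False, "sortable": False, "facetable": False},
        # A label used for narrowing and faceting, not for keyword matching.
        {"name": "category", "type": "Edm.String",
         "searchable": False, "filterable": True, "sortable": False, "facetable": True},
        {
            "name": "contentVector",
            "type": "Collection(Edm.Single)",
            "searchable": True,
            "retrievable": False,  # clients rarely need the raw vector back
            "dimensions": 1536,  # must match the embedding model's output size
            "vectorSearchProfile": "vector-profile",
        },
    ],
    "vectorSearch": {
        # HNSW: an approximate nearest-neighbor graph for fast vector queries.
        "algorithms": [{"name": "hnsw-config", "kind": "hnsw"}],
        "profiles": [{"name": "vector-profile", "algorithm": "hnsw-config"}],
    },
}

print(json.dumps(index_definition, indent=2))
```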

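Indexers (the pull model) automate ingestion from Azure Blob Storage, Azure Cosmos DB, Azure Table Storage, and other sources. They run the attached skillset, which can chunk and enrich content, and then write the results to the search index. Alternatively, the push model lets your own code upload documents directly through the API.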
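Chunking is worth understanding even when a skill does it for you. Here's a minimal fixed-size chunker with overlap, similar in spirit to the Text Split skill's pages mode. The character-based sizes are illustrative assumptions; real pipelines often split on sentence or token boundaries instead.

```python
def chunk_text(text: str, max_len: int = 200, overlap: int = 50) -> list[str]:
    """Split text into windows of at most max_len chars, each sharing `overlap` chars with the previous one."""
    if not 0 <= overlap < max_len:
        raise ValueError("overlap must be in [0, max_len)")
    chunks = []
    step = max_len - overlap
    start = 0
    while start < len(text):
        chunks.append(text[start:start + max_len])
        if start + max_len >= len(text):
            break  # this window already reaches the end of the text
        start += step
    return chunks


sample = "".join(str(i % 10) for i in range(25))
for chunk in chunk_text(sample, max_len=10, overlap=3):
    print(chunk)
```

The overlap means a sentence cut at a chunk boundary still appears whole in at least one chunk, at the cost of indexing some text twice.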

APIs support full Lucene syntax, vector queries, filters, facets, and semantic reranking—all composable for hybrid and RAG use cases.
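To show how these pieces compose, this sketch builds a single request body for the Search Documents (POST) REST API that combines a keyword query, a vector query, an OData filter, a facet, field selection, and semantic reranking. The field names (`contentVector`, `category`, and so on), the semantic configuration name `default`, and the `build_hybrid_query` helper are hypothetical; actually sending the request (with an `api-key` header or a Microsoft Entra token) is left out so the example runs offline. Note that a POST body uses `select`, while GET query strings use `$select`.

```python
import json
from typing import Optional


def build_hybrid_query(text: str, embedding: list[float],
                       category: Optional[str] = None, top: int = 10) -> dict:
    """Compose one request body: keyword + vector legs, optional filter, facets, semantic rerank."""
    body = {
        "search": text,  # full-text (BM25) leg
        "vectorQueries": [{
            "kind": "vector",
            "vector": embedding,  # query embedding computed by your app
            "fields": "contentVector",  # hypothetical vector field name
            "k": 50,  # nearest neighbors to feed into fusion
        }],
        "select": "id,title,content",  # return only the fields the app needs
        "facets": ["category"],
        "queryType": "semantic",  # rerank the fused results
        "semanticConfiguration": "default",  # hypothetical semantic config name
        "captions": "extractive",
        "top": top,
    }
    if category is not None:
        # OData string literals escape a single quote by doubling it.
        escaped = category.replace("'", "''")
        body["filter"] = f"category eq '{escaped}'"
    return body


print(json.dumps(build_hybrid_query("termination clause", [0.1, 0.2, 0.3], category="legal"), indent=2))
```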

In a RAG pipeline, Azure AI Search acts as the retrieval engine. It provides high-quality, contextually relevant passages that can be passed as grounding data to LLMs (e.g., Azure OpenAI). However, orchestration (prompt flows, state handling) happens outside the search service, so you build it yourself or use an orchestration framework.
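The hand-off from retrieval to generation is mostly careful string assembly. This sketch packs retrieved passages into a grounding block with source tags and a character budget, which is the piece your orchestration code (or framework) owns. The passage fields (`id`, `content`), the prompt wording, and the `build_grounded_prompt` helper are assumptions, and the LLM call itself is omitted.

```python
def build_grounded_prompt(question: str, passages: list[dict], max_chars: int = 2000) -> str:
    """Pack passages into a prompt, stopping before their combined length exceeds max_chars."""
    sources = []
    used = 0
    for p in passages:  # assumed to arrive in relevance order from the search call
        snippet = f"[{p['id']}] {p['content'].strip()}"
        if used + len(snippet) > max_chars:
            break
        sources.append(snippet)
        used += len(snippet)
    context = "\n".join(sources)
    return (
        "Answer using only the sources below. Cite source IDs in brackets. "
        "If the sources do not contain the answer, say you don't know.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )


hits = [
    {"id": "doc-7", "content": "Replicas add query throughput and availability."},
    {"id": "doc-2", "content": "Partitions add storage and indexing capacity."},
]
print(build_grounded_prompt("What do replicas do?", hits))
```

Stopping at a budget rather than truncating mid-passage keeps every cited source intact, which makes the model's citations easier to verify.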

🧠 Developer Insight: Azure AI Search is not a chatbot engine; it's the retrieval muscle behind the scenes.

5. Real-World Use Cases: AI Assistants, VoiceRAG, Legal Search

Azure AI Search is being used across industries:

VoiceRAG: Combine with speech models to power real-time question-answering bots.

Internal Chatbots: Use your PDF libraries, SharePoint files, and CRM data to power internal copilots.

Legal Discovery: Firms like UBS use it to rapidly search legal clauses with high precision.

Healthcare: BILH used hybrid search across their EHRs to deliver accurate results to practitioners.

🧠 Architect Tip: The more fragmented your data is, the more valuable Azure AI Search becomes.

6. Scaling & Optimization: More Than Just More Replicas

Performance isn't just about throwing more hardware at the problem. Azure AI Search performance depends on:

Index schema design: Avoid over-attributing fields (searchable + filterable + sortable) unless necessary.

Query design: Use $select to reduce payload size, and avoid expensive fuzzy or prefix queries unless required.

Capacity planning: Add partitions to scale storage and replicas to scale query throughput. Track performance with Azure Monitor.

Caching: Use Azure CDN or Redis for caching popular queries.
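For the caching point, the core idea is a cache keyed on the normalized query with an expiry, so popular queries skip the search service until their results may be stale. This in-process sketch stands in for Redis (where you would typically store the key with a TTL); `TTLQueryCache` is a hypothetical helper, and `fake_search` is a placeholder for a real search request.

```python
import time
from typing import Callable


class TTLQueryCache:
    """Cache search results per normalized query for ttl_seconds."""

    def __init__(self, search_fn: Callable[[str], list[str]], ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self._search_fn = search_fn
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: dict[str, tuple[float, list[str]]] = {}

    def search(self, query: str) -> list[str]:
        key = " ".join(query.lower().split())  # normalize case and whitespace
        now = self._clock()
        cached = self._entries.get(key)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]  # fresh hit: no call to the search service
        results = self._search_fn(query)
        self._entries[key] = (now, results)
        return results


calls = []


def fake_search(query: str) -> list[str]:
    # Hypothetical stand-in for a real Azure AI Search request.
    calls.append(query)
    return [f"result for {query.strip().lower()}"]


cache = TTLQueryCache(fake_search, ttl_seconds=30)
cache.search("Azure pricing")
cache.search("  azure   PRICING ")  # same normalized key: served from cache
print(len(calls))  # 1
```

Injecting the clock keeps the expiry logic testable; in production the TTL should reflect how quickly your index content changes.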

🧠 Developer Tip: Avoid fuzzy and prefix queries on high-cardinality fields. They expand into many index terms and can badly hurt performance.

7. Enterprise-Grade Security & Compliance

Azure AI Search is covered by major compliance offerings, including GDPR, HIPAA, and ISO 27001, and provides strong encryption and access control:

Data at Rest: AES-256, FIPS 140-2 compliant.

Customer-Managed Keys (CMK): Full control over encryption keys, but can increase query times by roughly 30–60%, depending on the index and queries.

Private Endpoints: Eliminate public exposure using Azure Private Link.

RBAC & Managed Identity: Fine-grained access control for index operations and outbound connections.

🧠 Architect Tip: Only use CMK where required; otherwise, stick with Microsoft-managed keys.

8. Strengths, Limits, and the Competitive Arena

Strengths:

Deep Azure integration (Storage, Cosmos, ML, Functions, OpenAI)

Hybrid + Semantic search baked-in

Built for RAG, not just traditional search

Limitations:

Complex setup for beginners

Premium pricing

Still requires orchestration for full LLM workflows

Compared to Others:

Amazon Kendra offers strong ML-powered search and fits best in AWS-centric environments

Google Cloud Search is great for Google Workspace

Elasticsearch / Solr are powerful and flexible, but you typically operate them yourself or pay for a separate managed offering

Meilisearch / Qdrant are fast and modern but less enterprise-grade

🧠 Developer Insight: Use Azure AI Search when you need LLM grounding + enterprise compliance + AI-native enrichment in one place.

9. Final Thoughts: Don’t Just Search—Understand

Search used to mean matching keywords. Azure AI Search redefines that by blending lexical precision, semantic intelligence, vector similarity, and AI enrichment—all inside a scalable, secure, cloud-native service.

If you’re building modern AI products, especially in Azure, don’t start with a database. Start with Azure AI Search.

"In the age of generative AI, relevance isn't a luxury—it’s a necessity. Azure AI Search helps you find what matters, not just what matches."

🎙️ Prefer listening over reading?

I’ve also recorded a deep-dive podcast episode breaking down Azure AI Search: Powering Enterprise AI and RAG.

👉 Listen to the full episode here

Happy Reading :)